Introduction

As video games grow increasingly complex, and productions handle huge amounts of data for next-gen games, it's essential to have a way to store and validate game data in an organized and efficient manner, especially when sending this data between different DCC (Digital Content Creation) packages. JSON (JavaScript Object Notation) is a lightweight data-interchange format that has become a popular choice for storing some of this data.

Data inaccuracies can be extremely disruptive to tools and pipelines, not to mention the frustration that comes with manually coding validation functions to safeguard your data's integrity. In this article, we'll explore how to use Python and the Pydantic library to validate JSON files.

Topics Covered

- Setting up Pydantic

- Creating models

- Validating JSON files with Pydantic

Disclaimer

- Some basic Python knowledge is needed.

- If you like how classes are written with Pydantic but don't need data validation, take a look at the dataclasses module in Python's standard library.

- Pydantic is a very versatile library and offers a huge set of tools; I will only cover the basics to get you started.
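To illustrate the dataclasses note above, here is a minimal sketch using only the standard library. The AssetBounds class and its fields are hypothetical names chosen for this example. It shows that a dataclass generates __init__ and __repr__ for us but does not enforce the type annotations.

```python
from dataclasses import dataclass


@dataclass
class AssetBounds:
    """Hypothetical bounds container; the fields are illustrative only."""
    x: float
    y: float
    z: float


# The generated __init__ and __repr__ work as expected...
bounds = AssetBounds(x=1.0, y=2.0, z=3.0)
print(bounds)

# ...but the annotations are not enforced: a string is stored as-is.
unchecked = AssetBounds(x="not a number", y=2.0, z=3.0)
print(type(unchecked.x).__name__)
```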

Setting up Pydantic

Pydantic is a Python library that validates data structures using type annotations. It simplifies working with external data sources, like APIs or JSON files, by ensuring the data is valid and conforms to expected data types. To get started with Pydantic, we'll need to install it using pip. I will be working with PyCharm in a Virtual Environment so you can do one of the following:

- Ctrl + Alt + S to open Settings. Look for Python Interpreter, click the + icon, search for pydantic, and click Install Package.

or

- Go to the terminal and run pip install pydantic.
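After installing, it can be worth confirming which version ended up in the environment, because some names used in this article (such as the validator decorator and the json(), dict(), and schema() helpers) were renamed in Pydantic 2. A small sketch of such a check follows; the variable names are my own.

```python
import pydantic

# VERSION is a plain string such as "1.10.x" or "2.x.y".
installed_version = pydantic.VERSION
major_version = int(installed_version.split(".")[0])
print(f"pydantic {installed_version} (major version {major_version})")
```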

Creating Models

With Pydantic installed, we can now create models for our data. In Pydantic, models are Python classes that define the structure of the data we want to validate.

For example, let's say we have a game with different types of assets, and we want to store information about each one of them in a JSON file. Let's also assume that one of the things we want to store from our asset is the bounds of the asset.

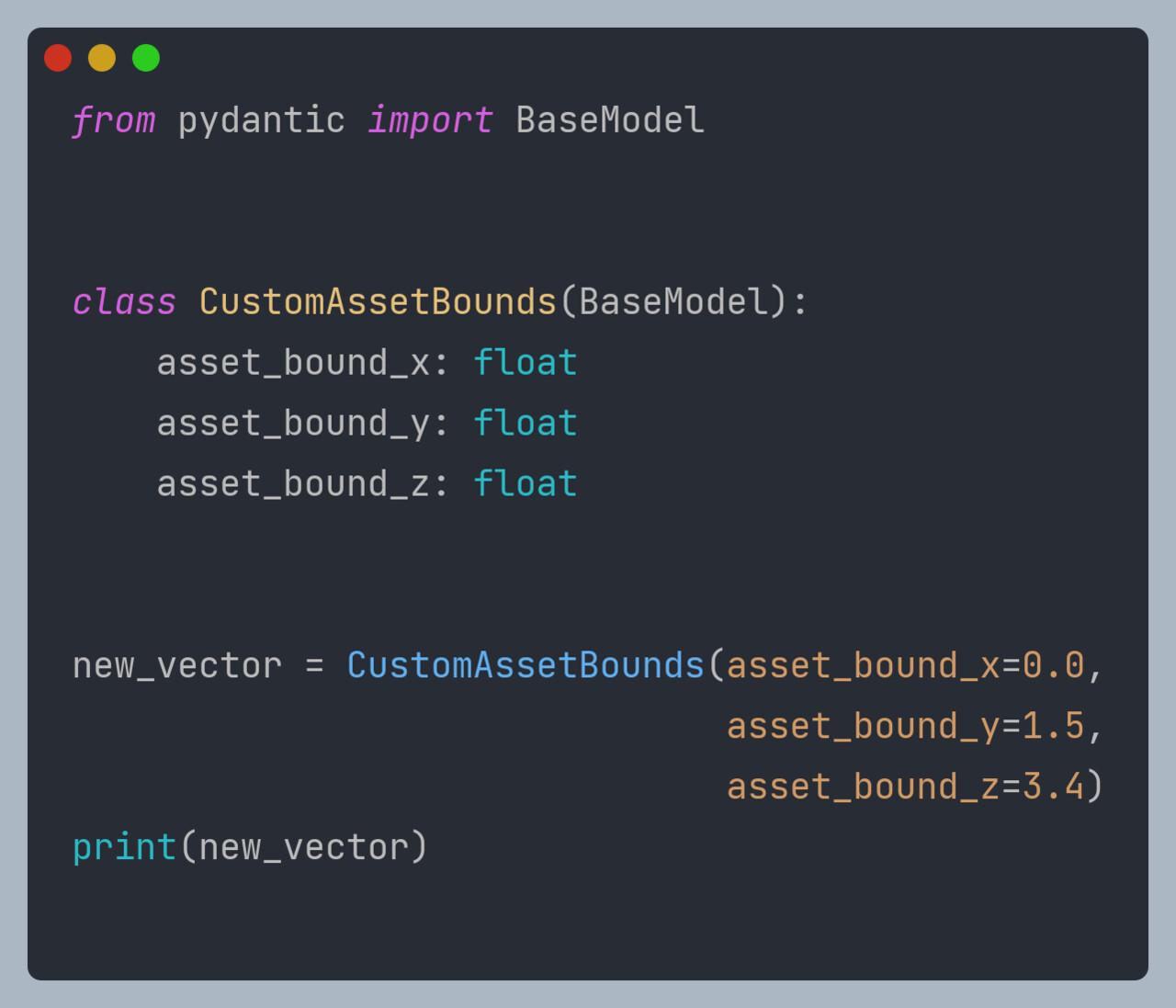

Let's first create a model for our bounds vector:

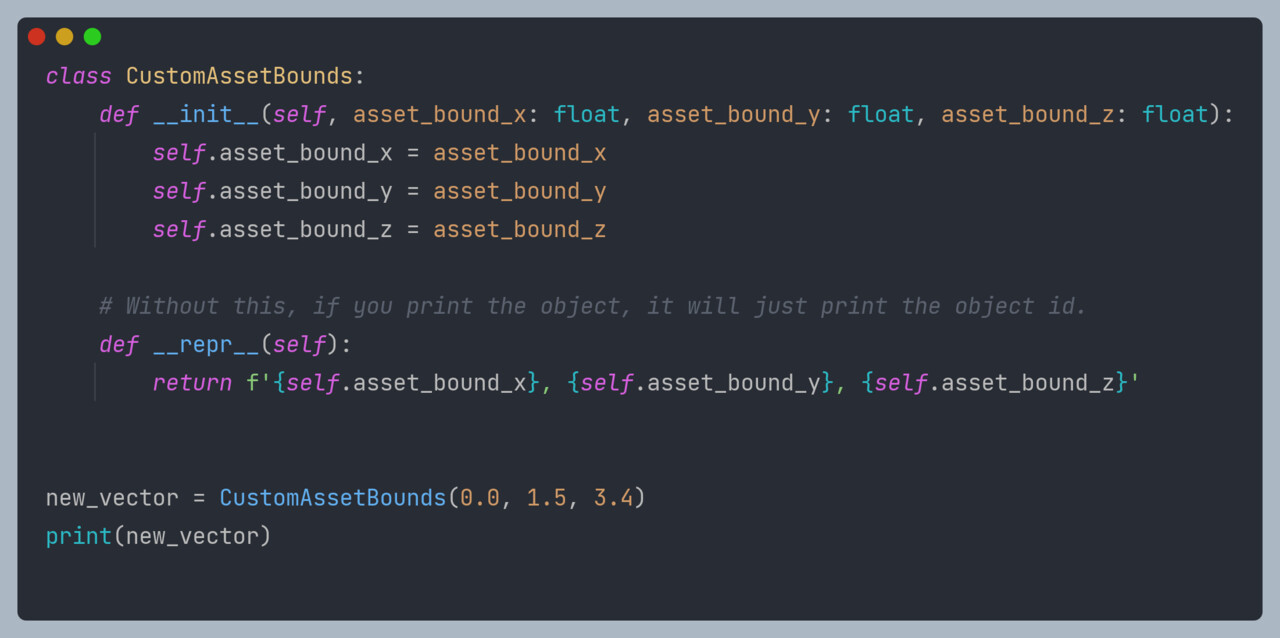
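As a text version of the same idea, a minimal sketch of such a bounds model might look like the following. The class name CustomAssetBounds is the one used later in this article, but the field names x, y, and z are assumptions and may differ from the ones in the image.

```python
from pydantic import BaseModel


class CustomAssetBounds(BaseModel):
    """Bounds of an asset along each axis (field names are assumptions)."""
    x: float
    y: float
    z: float


# Keyword arguments are validated and converted to float on construction.
bounds = CustomAssetBounds(x=120, y=80.5, z=200)
print(bounds)
```

Note that the integer values are accepted and converted to floats, since they can be represented as floats without loss.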

This example already shows that defining the class is much cleaner and simpler than it would be with a plain Python class.

Using Pydantic for defining classes in Python can make code more concise, less error-prone, and easier to maintain compared to defining classic classes. With Pydantic, you define a class inheriting from BaseModel, and Pydantic generates the __init__ method for you based on the class attributes. You also get a human-readable representation of the object by default, and you can customize it using the __str__ method if needed. This can save you time and effort compared to defining the __init__ and __repr__ methods manually in a classic class.

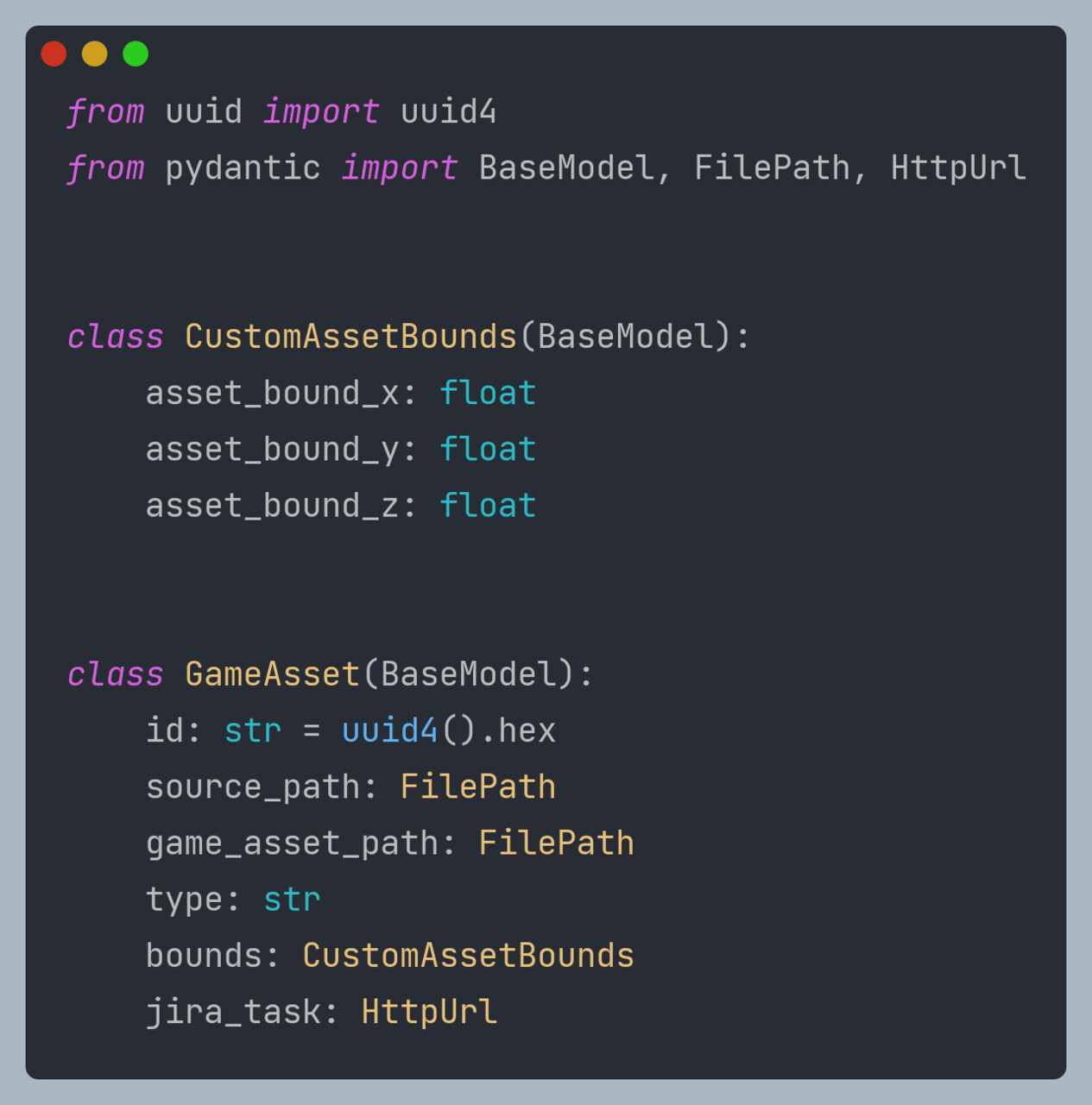
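For comparison, a hand-written class carrying the same three values could be sketched like this. The name ClassicAssetBounds and the x, y, z attributes are hypothetical and only serve the comparison.

```python
class ClassicAssetBounds:
    """Hand-written counterpart; name and fields are illustrative."""

    def __init__(self, x: float, y: float, z: float) -> None:
        # Every attribute has to be assigned by hand.
        self.x = x
        self.y = y
        self.z = z

    def __repr__(self) -> str:
        # Without this, printing shows only the class name and memory address.
        return f"ClassicAssetBounds(x={self.x}, y={self.y}, z={self.z})"


bounds = ClassicAssetBounds(1.0, 2.0, 3.0)
print(bounds)
```

Even with this boilerplate in place, the class still performs no type checking; that would require further code in __init__.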

Now let's create our Asset class. For JSON validation, the model attributes should match the data stored in your JSON:

In this example, we have created a class GameAsset that inherits from BaseModel with a few different annotations:

- id (str): Unique ID for each asset, generated with Python's uuid module.

- source_path (FilePath): Path to the source file of this game asset. FilePath also checks that the path points to an existing file.

- game_asset_path (FilePath): Path to the game asset, which must also exist on disk. If we were working in UE for instance, it would point to a .uasset.

- type (str): String defining what type of asset this is, for example, a 'Rock'.

- bounds (CustomAssetBounds): This stores an instance of our custom asset bounds class.

- jira_task (HttpUrl): This could store the link to the Jira task for this asset. It is just an example to showcase different Pydantic features.

The type annotations tell Pydantic what type of data to expect in each field.

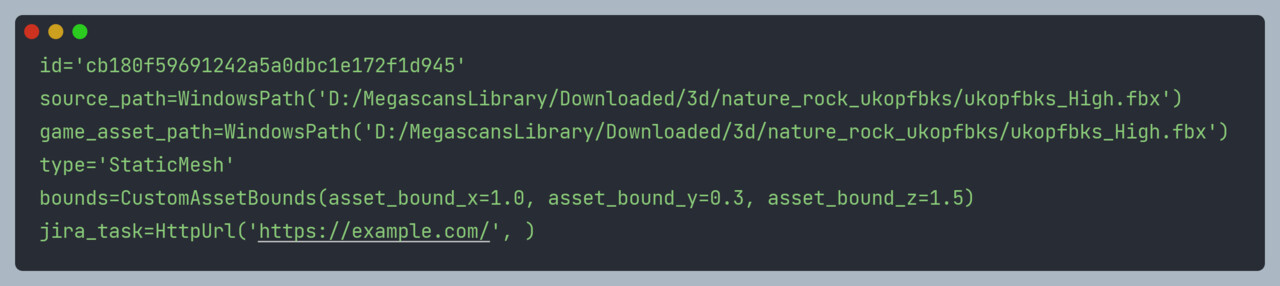

Let's now create a GameAsset object:

So far this sounds useful but perhaps unexciting: other than cleaner code, there seems to be no other advantage. If we run the code, our object is printed.

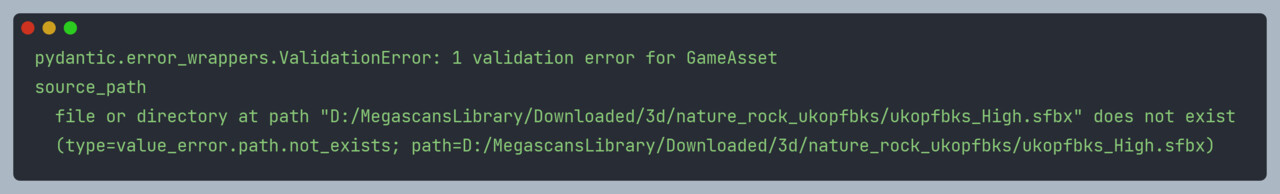

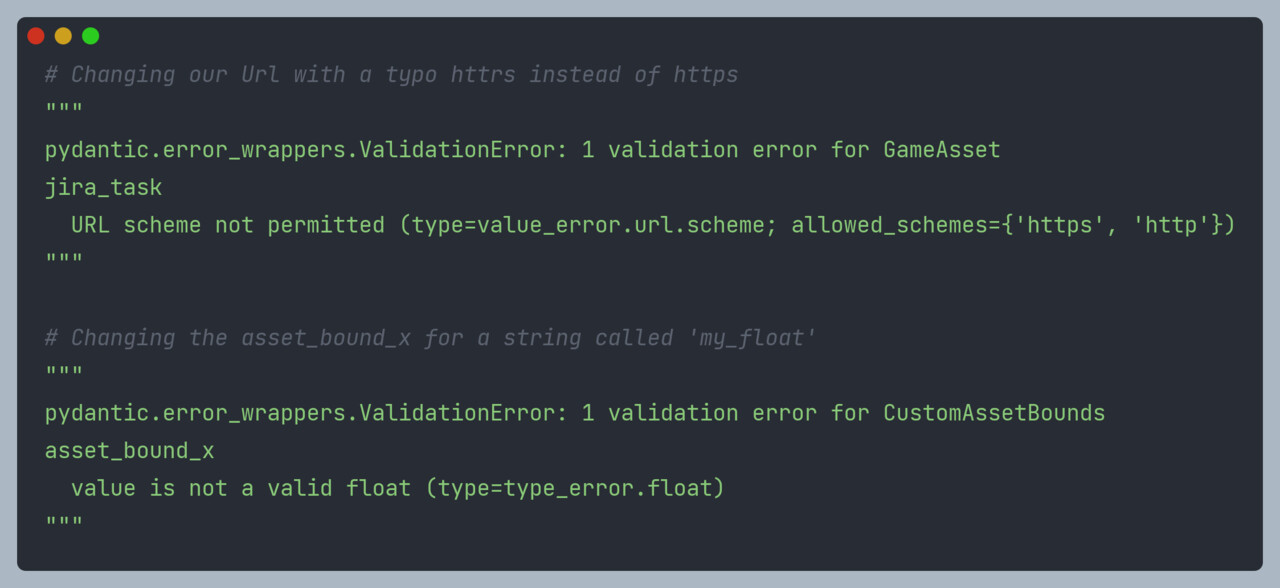
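Putting the model and the object creation together, a runnable sketch could look like the one below. The field list follows the description above; the bounds field names, the file names, and the Jira URL are assumptions made for this example. Because FilePath only accepts existing files, the sketch first creates two empty placeholder files in a temporary directory.

```python
import tempfile
import uuid
from pathlib import Path

from pydantic import BaseModel, FilePath, HttpUrl


class CustomAssetBounds(BaseModel):
    # Field names are assumptions made for this sketch.
    x: float
    y: float
    z: float


class GameAsset(BaseModel):
    id: str
    source_path: FilePath       # must point to an existing file
    game_asset_path: FilePath   # must point to an existing file
    type: str
    bounds: CustomAssetBounds
    jira_task: HttpUrl


# FilePath requires real files, so create two placeholder files to point at.
work_dir = Path(tempfile.mkdtemp())
source_file = work_dir / "rock_01.fbx"
game_file = work_dir / "rock_01.uasset"
source_file.touch()
game_file.touch()

asset = GameAsset(
    id=str(uuid.uuid4()),
    source_path=source_file,
    game_asset_path=game_file,
    type="Rock",
    bounds={"x": 120, "y": 80.5, "z": 200},  # a dict is parsed into CustomAssetBounds
    jira_task="https://jira.example.com/browse/ART-101",  # hypothetical URL
)
print(asset)
```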

Pydantic's true power becomes apparent when we introduce errors into our data. Let's change one of the paths to one that does not exist in our project and run the code again.

As we can see, we receive an automatic validation error out of the box. Similarly, we can easily test other annotations such as HttpUrl or float, and Pydantic will raise errors if the values provided do not conform to the expected format. The ease of use and built-in data validation make Pydantic a valuable tool for developing robust and reliable code in Python.
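The failure case can be reproduced in a few lines. This sketch uses a reduced, hypothetical model named SourceFile with a single FilePath field and passes it a path that was never created.

```python
import tempfile
from pathlib import Path

from pydantic import BaseModel, FilePath, ValidationError


class SourceFile(BaseModel):
    """Reduced, hypothetical model with a single FilePath field."""
    source_path: FilePath


# A path inside a fresh temporary directory that was never created.
missing_file = Path(tempfile.mkdtemp()) / "does_not_exist.fbx"

failed_fields = []
try:
    SourceFile(source_path=missing_file)
except ValidationError as error:
    validation_failed = True
    # Each error entry records the location of the offending field.
    failed_fields = [entry["loc"][0] for entry in error.errors()]
    print(error)
else:
    validation_failed = False
```

Catching ValidationError like this lets a tool report every offending field to the user instead of crashing on the first bad value.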

Validating JSON Files with Pydantic

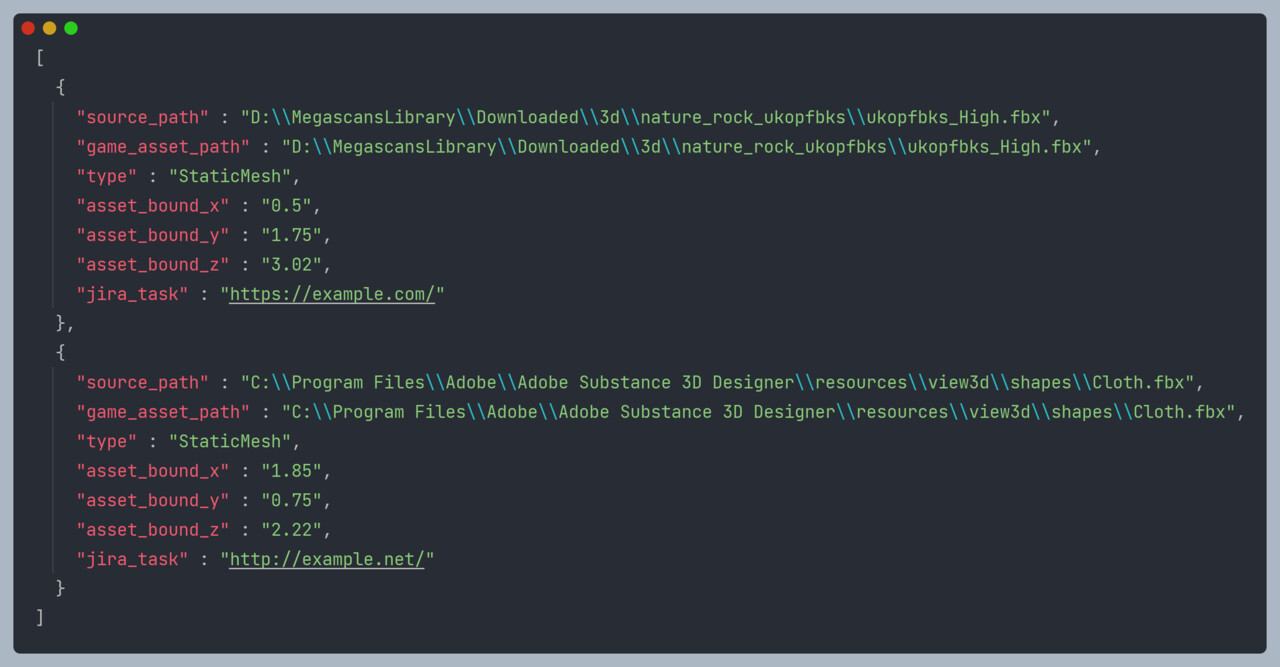

With our models defined and essential features highlighted, let's move on to a more complex example to explore some of Pydantic's capabilities. Suppose we have a hypothetical JSON file containing asset data named city_assets.json:

Note that the data presented in the city_assets.json file in the following example does not represent any meaningful or relevant information. It serves only to demonstrate how Pydantic can be used to validate and process data in a Python program.

We can use the following code to validate this JSON file with Pydantic:

In this code, we expand our GameAsset class, which represents a game asset and contains information about its identity, location, type, and bounds. The class still has attributes such as an ID, the path to the source file for the asset, the path to the asset within the game directory, the type of asset, the bounding box dimensions, and a Jira task URL. It also includes a custom error message that is reported when the source path is not an FBX file.

Pydantic already validates the GameAsset attributes through their type annotations. Here I also introduce the validator decorator (renamed field_validator in Pydantic 2), which attaches a custom check to the source_path attribute to verify that it points to an .fbx file.

Subsequently, we retrieve the data stored in a JSON file named city_assets.json and leverage the GameAsset class, along with list comprehension, to generate a collection of GameAsset objects from the retrieved JSON data.
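The steps described above can be sketched as follows. Several things here are assumptions: the JSON file is taken to hold a top-level list of asset objects, the bounds use x, y, and z fields, and all file names, IDs, and URLs are made up. So that the sketch runs anywhere, it writes its own small city_assets.json into a temporary directory before reading it back. The import fallback is only there so the same code works with both the 1.x and 2.x decorator names.

```python
import json
import tempfile
from pathlib import Path

from pydantic import BaseModel, FilePath, HttpUrl

try:
    from pydantic import field_validator as validator  # Pydantic 2.x name
except ImportError:
    from pydantic import validator  # Pydantic 1.x name


class CustomAssetBounds(BaseModel):
    x: float
    y: float
    z: float


class GameAsset(BaseModel):
    id: str
    source_path: FilePath
    game_asset_path: FilePath
    type: str
    bounds: CustomAssetBounds
    jira_task: HttpUrl

    @validator("source_path")
    @classmethod
    def source_must_be_fbx(cls, value: Path) -> Path:
        # Runs after FilePath has confirmed that the file exists.
        if value.suffix.lower() != ".fbx":
            raise ValueError(f"{value.name} is not an FBX file")
        return value


# Build a throwaway project so the sketch runs anywhere (all names are made up).
project_dir = Path(tempfile.mkdtemp())
entries = []
for name in ("building_01", "lamp_post_01"):
    source_file = project_dir / f"{name}.fbx"
    game_file = project_dir / f"{name}.uasset"
    source_file.touch()
    game_file.touch()
    entries.append({
        "id": name,
        "source_path": str(source_file),
        "game_asset_path": str(game_file),
        "type": "Prop",
        "bounds": {"x": 1.0, "y": 2.0, "z": 3.0},
        "jira_task": "https://jira.example.com/browse/CITY-1",
    })

json_path = project_dir / "city_assets.json"
json_path.write_text(json.dumps(entries), encoding="utf-8")

# Load the JSON and validate every entry with a list comprehension.
with json_path.open(encoding="utf-8") as json_file:
    raw_assets = json.load(json_file)

assets = [GameAsset(**raw_asset) for raw_asset in raw_assets]
print(f"Validated {len(assets)} assets")
```

If any entry fails validation, the list comprehension stops at that entry and raises a ValidationError, so a real tool would probably wrap the call in a try/except block to collect all failures.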

List comprehensions are a concise way of creating lists in Python. They allow you to generate a new list by applying an expression to each item in an existing iterable, such as a list or a range. The basic syntax of a list comprehension is as follows:

new_list = [expression for item in iterable if condition]

Here, expression is the operation or calculation to be performed on each item in the iterable. The if clause is optional and lets you keep only the items that satisfy a condition.

For example, let's say you have a list of numbers and you want to create a new list with the squares of those numbers. You could use a for loop to do this as follows:

    numbers = [1, 2, 3, 4, 5]
    squares = []
    for number in numbers:
        squares.append(number ** 2)

Using a list comprehension, the same result can be achieved in a more concise way:

    numbers = [1, 2, 3, 4, 5]
    squares = [number ** 2 for number in numbers]
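The optional condition from the syntax shown earlier is not used in the examples above, so here is a short sketch that adds one. It squares only the even numbers; the name even_squares is my own.

```python
numbers = [1, 2, 3, 4, 5]

# Keep only the even numbers, then square them.
even_squares = [number ** 2 for number in numbers if number % 2 == 0]
print(even_squares)  # [4, 16]
```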

Conclusion

Pydantic is a powerful tool for validating JSON files, and game development data in general. By using models and type annotations, we can ensure that our data is well-organized and consistent. It allows us to create classes that describe data structures and easily define defaults, making those classes easier to maintain and modify. It also offers attribute customization and error handling with user-friendly error messages. Finally, it provides helper methods to export models, such as json() and dict(), and to create JSON Schemas with schema().
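As a rough sketch of those export helpers, the example below converts a small model to a dictionary, a JSON string, and a JSON Schema. The CustomAssetBounds fields are assumptions. In Pydantic 2 the helpers are named model_dump(), model_dump_json(), and model_json_schema(), so the sketch picks whichever set is available.

```python
import json

from pydantic import BaseModel


class CustomAssetBounds(BaseModel):
    # Field names are assumptions made for this sketch.
    x: float
    y: float
    z: float


bounds = CustomAssetBounds(x=1.0, y=2.0, z=3.0)

# Pydantic 2.x renamed the export helpers; fall back to the 1.x names otherwise.
if hasattr(bounds, "model_dump"):
    as_dict = bounds.model_dump()
    as_json = bounds.model_dump_json()
    json_schema = CustomAssetBounds.model_json_schema()
else:
    as_dict = bounds.dict()
    as_json = bounds.json()
    json_schema = CustomAssetBounds.schema()

print(as_dict)
print(as_json)
print(json.dumps(json_schema, indent=2))
```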

In addition, Pydantic is fast: it uses Python's type annotations to build a low-overhead validation system that can quickly and accurately check that data conforms to a specific structure.

However, Pydantic's validation only checks whether the data has the expected types and constraints; it does not check whether the data itself is intrinsically correct. For example, if we take the GameAsset model we created earlier and look at the attribute game_asset_path, Pydantic will only check whether the value passed to that attribute is a valid FilePath, that is, a path to an existing file, but it won't check whether that file is actually a valid game asset. This means that if the data passed to Pydantic has the correct data types and adheres to the constraints we defined, Pydantic will consider it valid, even if the data itself is incorrect or inappropriate.

To ensure that the data content is also correct, we must perform additional checks beyond the ones Pydantic provides out of the box, for example by using the @validator decorator.

With all of this in mind, whether you are creating a small indie game or a large AAA title, using Pydantic for data validation is a great way to ensure your data is reliable and well-organized. By combining Pydantic with additional checks and validations, we can exchange data between different DCC packages with accurate, appropriate data that provides the best possible user experience for developers and artists.

Resources

- Pydantic Tutorials: https://pydantic-docs.helpmanual.io/usage/tutorials/

- Pydantic Official Documentation: https://pydantic-docs.helpmanual.io/

- Pydantic GitHub Repository: https://github.com/samuelcolvin/pydantic

- Pydantic PyPI page: https://pypi.org/project/pydantic/

- Pydantic FAQ: https://pydantic-docs.helpmanual.io/usage/faq/